Deep Dive into Diffusion Models

What is the Diffusion Models?

Diffusion Model is know

Diffusion Models in nutshell

Let’s first create a very simple diffusion model based on MNIST data. (Full code is available here)

The central idea is to take each training image and to corrupt it using a multi-step noise process to transform it into a sample from Gaussian distribution. A deep neural network is then trained to invert this process, and once trained the network can then generate new images starting with samples from Gaussian as input

Deep LearningFoundations and Concepts

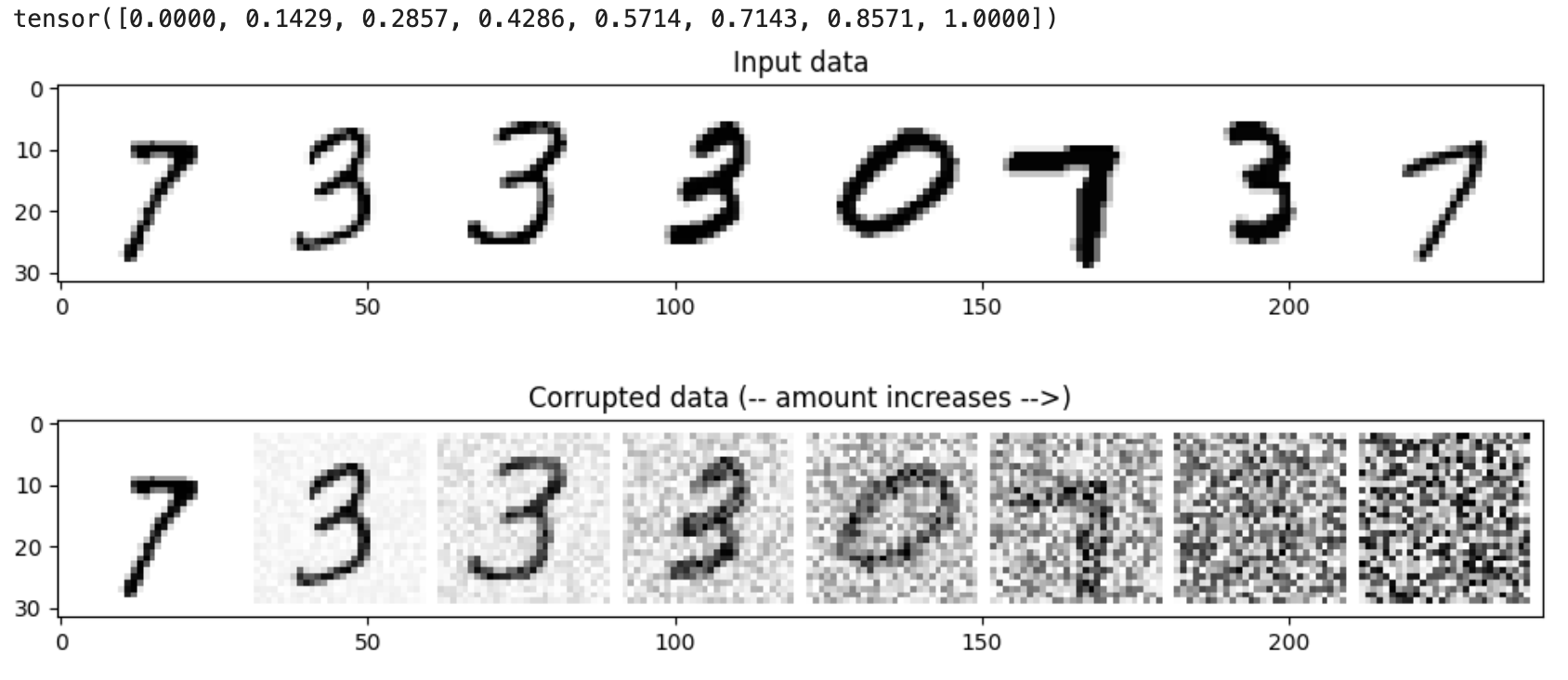

The corrupt process is defined as:

We take a batch of image in, and corrupt the image the according to different level of noise.

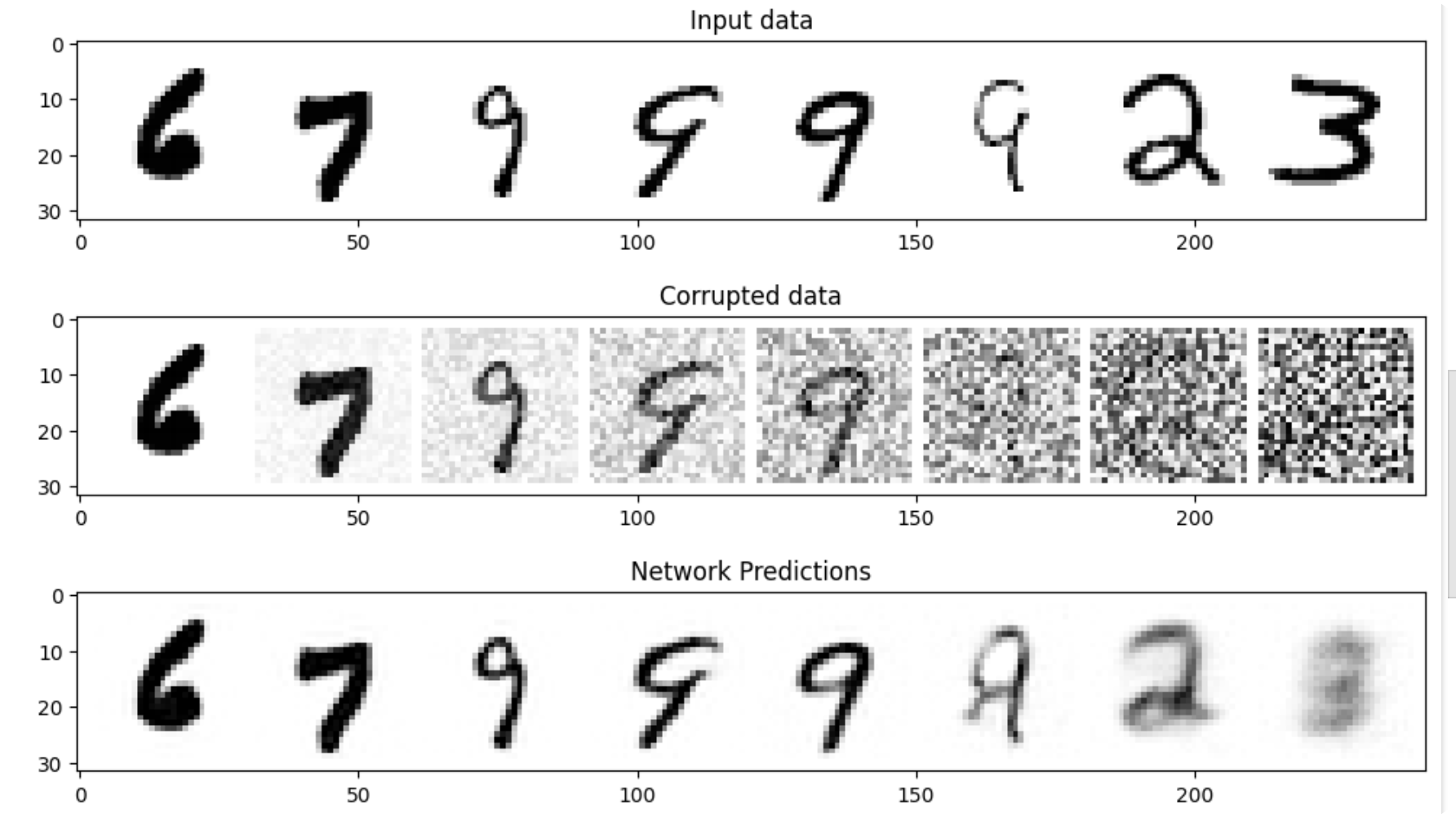

After we get images and corresponding corrupted images, we need to create a neural network to invert this process, which mean we need the neural network take the noise image in, get the de-noised(real) image out. And we want those two as close as possible. So, the loss function \(\mathcal{L}\) is:

\[ \mathcal{L}(\theta) = \sum_{i = 1}^{N}\| f_\theta(\hat{\mathrm{x}}_i) - \mathrm{x}_i \|^2 \tag{1}\]

which is the Mean Square Loss.

So, there are different types of neural network, which one should we choose? Since we need the output has same shape as the input, the U-Net(Ronneberger, Fischer, and Brox 2015) is perfect choice.

Now, we got everything we need to trained a neural network, data, model, loss function, let’s start training!!

Show the code

batch_size = 128

train_dataloader = DataLoader(dataset, batch_size=batch_size, shuffle=True)

epochs = 10

net = UNet() # This UNet is trained to predict the original image from the corrupted image

criterion = nn.MSELoss()

optimizer = torch.optim.Adam(net.parameters(), lr=1e-3)

for epoch in range(epochs):

for x, _ in tqdm(train_dataloader):

noise_amount = torch.rand(x.shape[0]) # Random Generate some noise level[0, 1] add to image x

noisy_x = corrupt(x, noise_amount) # Corrput the image

pred = net(noisy_x) # NN predict what the de-noised image x

loss = criterion(pred, x) # Compare

# Optimize

optimizer.zero_grad()

loss.backward()

optimizer.step()After we trained the model for 10 epochs, we can see that it predict not-bad output.

Though, for the image with higher noise-level(more like Gaussian Distribution), the network perform well. One small trick we can use it to pass the image through model several times. We hope each time, the predicted image will get better.

Show the code

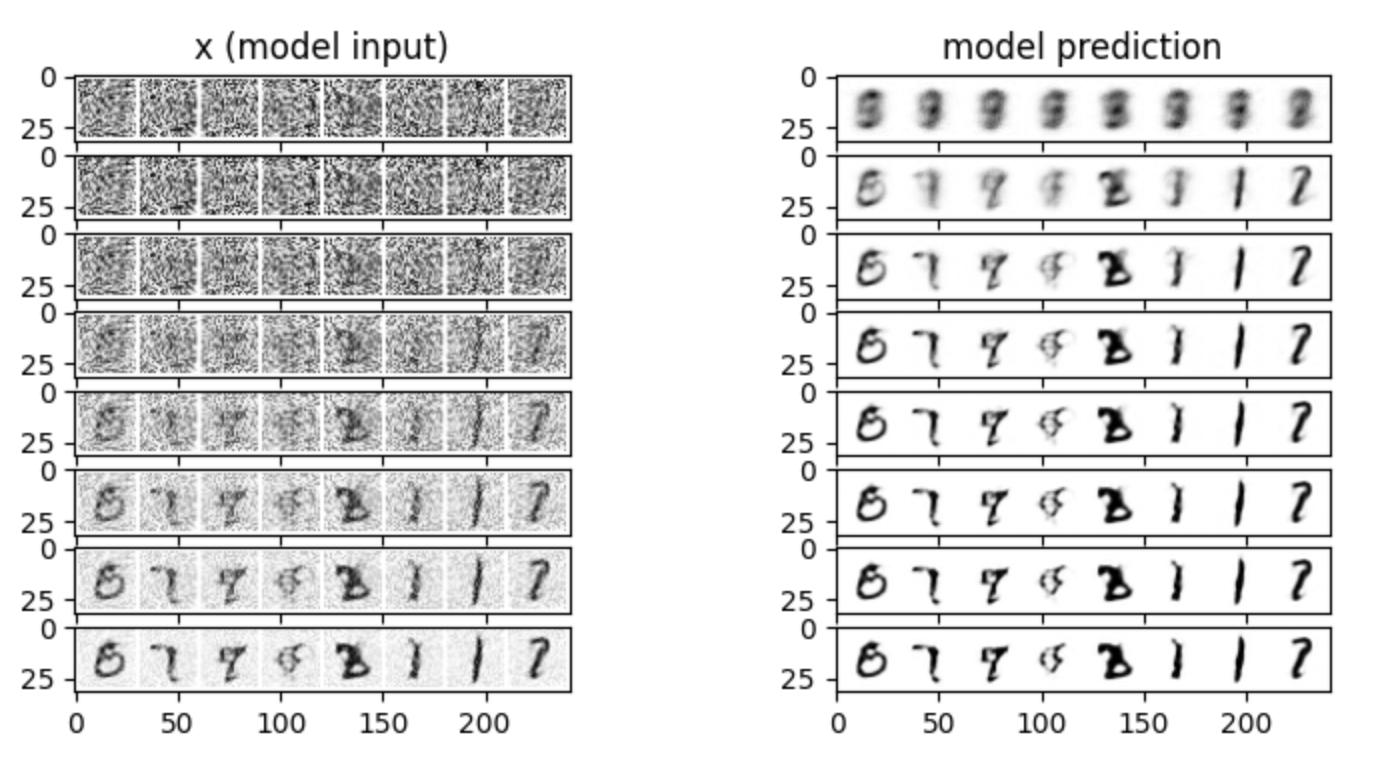

n_steps = 8

x = torch.rand(8, 1, 28, 28).to(device)

step_history = [x.detach().cpu()]

pred_output_history = []

for i in range(n_steps):

with torch.no_grad():

pred = net(x)

step_history.append(x.detach().cpu())

pred_output_history.append(pred.detach().cpu())

mix_factor = 1 / (n_steps - i)

x = x * (1 - mix_factor) + pred * mix_factorAfter we pass the model 8 times, we get the result:

It shows that the output truly get better each times.

We increase the number of steps, the result will get better. Below is how it look like after we pass the model 40 times.

Diffusion Model for Discrete Data

Recently, there are some good exploration for the diffusion model when apply on the. For example, Inception is the first commercial-scale diffusion language model. Which is is faster than the general language model.